Bring Characters to Life

LipsyncFlow transforms static images into dynamic, speaking characters with realistic lip movements. Whether you're creating content for entertainment, education, or business, our AI-powered lipsync technology delivers high-quality results.

Simply provide a character image, voice sample, and dialogue text, and watch as LipsyncFlow generates seamless video where your character speaks with natural lip movements and facial expressions.

Quick & Easy Installation

See how simple it is to get started with LipsyncFlow!

StreamTeem's fourth app (LipsyncFlow) provides easy-to-use AI actor video rendering.

What is LipsyncFlow?

LipsyncFlow combines two AIs into one workflow-based job processing app.

The first AI converts the text you type into speech that matches the style and tone of any voice sample you provide.

The second AI takes a starting image of a character and then makes that character speak the audio output from the first AI.

Installing a series of Python apps that rely on mutually incompatible libraries can make even experienced techies give up on trying to run today's advanced AI models at home.

Getting these AI models to work on consumer GPUs is even more difficult.

The model files are also very large (for example, 125 GB) and are downloaded from developer sites that might disappear whenever the developer decides to take them down.

To get around these problems, just make sure you have a GPU with at least 24 GB of VRAM, such as a 3090, 4090, 5090 or higher, then download and install LipsyncFlow and run the application.

As long as your Windows and GPU drivers are all up to date, you are now ready to create AI actors saying whatever you choose.

How LipsyncFlow Works

Watch this animated demonstration of the AI lipsync generation process

LipsyncFlow Intro Video

Powerful Features

🎭 Character Management

Define characters with default images and voice print audio files. Each character can be reused across multiple videos with consistent appearance and voice.

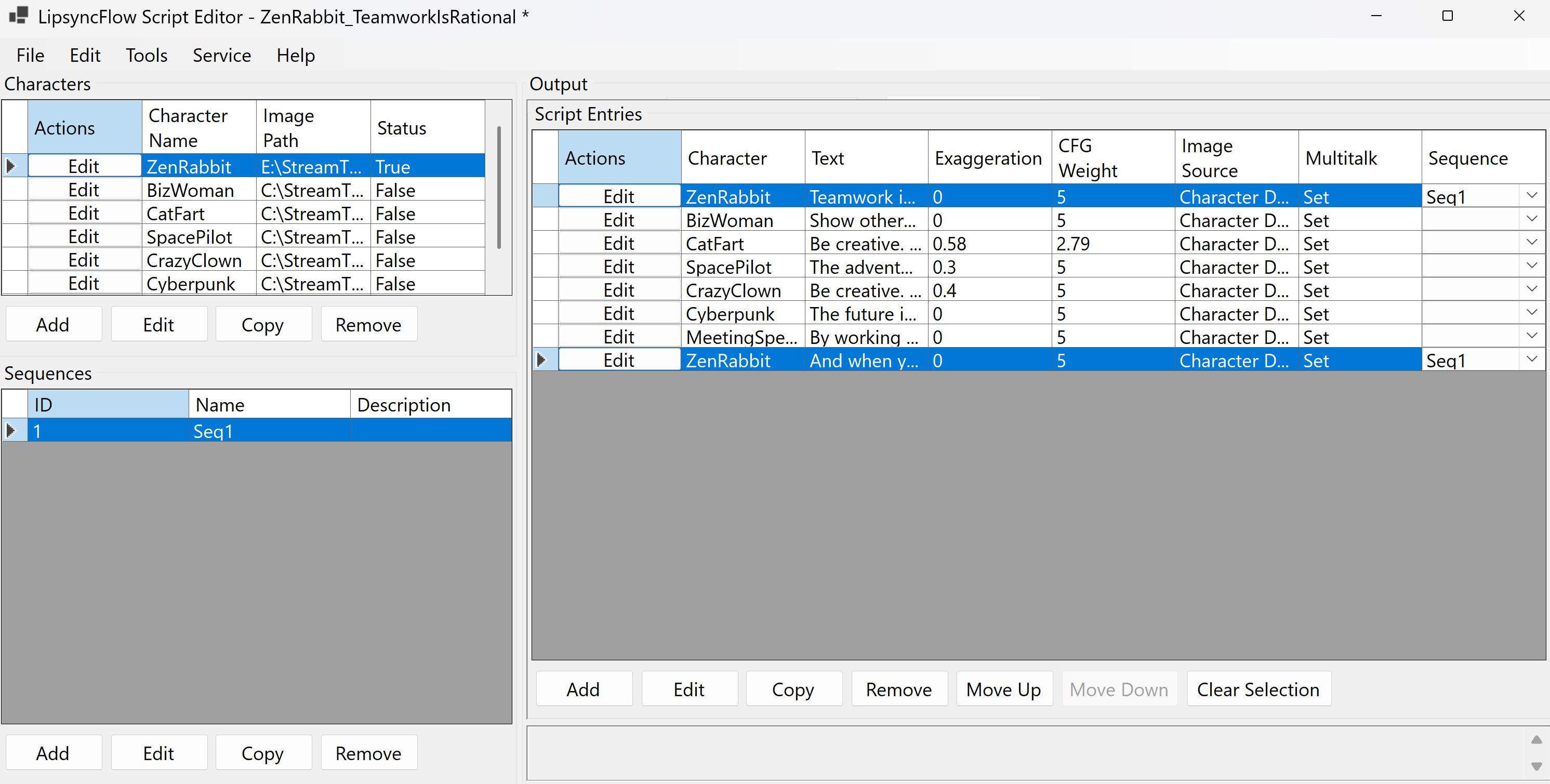

📝 Script Editor

Create and manage dialogue scripts with our intuitive interface. Split long text automatically and configure TTS parameters for optimal results.

🎞️ Sequence Organization

Group related script entries into sequences and apply FFmpeg filters for high-quality video processing and effects.

🤖 AI-Assisted Editing

Generate comprehensive AI prompts to edit scripts using external AI chat interfaces, then import the results back seamlessly.

⚙️ Advanced Controls

Fine-tune video generation with advanced parameters including exaggeration, CFG weight, and audio guide scaling for perfect lipsync.

💾 File Management

Save, load, export, and import scripts in various formats including native .lipsync and JSON for maximum compatibility.

High Quality Results

LipsyncFlow uses state-of-the-art AI models including MultiTalk for video generation and Chatterbox for voice cloning. Make as many videos as you like and feel free to experiment without the need to pay for each video rendered because you run the AI on your own GPU on your own Windows PC (24 GB+ VRAM required).

Advanced features include frame continuity between clips, customizable video prompts for emotional expression, and comprehensive FFmpeg integration for post-processing effects.

How It Works

- Create Characters → Define your AI actors with images and voice samples

- Write Scripts → Add dialogue entries with text, TTS parameters, and video prompts

- Configure Settings → Adjust exaggeration, CFG weight, and other parameters

- Process Videos → Submit to the LipsyncFlow service running on your own PC for AI rendering

- Export Results → Receive high-quality videos with natural lipsync

📚 Help & Documentation

Get detailed guidance on using LipsyncFlow with our comprehensive help system. Whether you're just starting out or looking to master advanced features, we have the resources you need.

🎛️ Main Form Guide

Learn how to navigate the main interface, manage job queues, and access core tools for video generation workflow.

📝 Script Editor Guide

Master the script editor for creating characters, managing dialogue, configuring TTS parameters, and organizing sequences.

🎬 FFmpeg Editing Guide

Learn how to create and manage FFmpeg filter chains for video processing, effects, and post-production enhancements.
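As a rough illustration of what a filter chain is, the sketch below assembles an FFmpeg `-vf` argument from a list of filters. It is not LipsyncFlow's own code: the helper names and the file names are hypothetical, and only the `eq` and `fade` filters and their options come from FFmpeg itself.

```python
import shlex


def build_filter_chain(filters):
    """Join (name, options) pairs into an FFmpeg -vf filter chain string."""
    parts = []
    for name, options in filters:
        if options:
            # Filter options are key=value pairs separated by colons.
            opts = ":".join(f"{key}={value}" for key, value in options.items())
            parts.append(f"{name}={opts}")
        else:
            parts.append(name)
    # Filters in a simple chain are separated by commas and run left to right.
    return ",".join(parts)


def build_ffmpeg_command(input_path, output_path, filters):
    """Return the argument list for an FFmpeg run; nothing is executed here."""
    return [
        "ffmpeg", "-i", input_path,
        "-vf", build_filter_chain(filters),
        "-c:a", "copy",  # leave the generated speech audio untouched
        output_path,
    ]


# Hypothetical example: a slight colour boost followed by a half-second fade-in.
chain = [
    ("eq", {"contrast": 1.1, "saturation": 1.2}),
    ("fade", {"t": "in", "st": 0, "d": 0.5}),
]
command = build_ffmpeg_command("clip_001.mp4", "clip_001_graded.mp4", chain)
print(shlex.join(command))
```

Keeping the chain as structured data rather than one long string makes it easier to reorder or remove a filter before the command is run.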

🚀 Quick Start Workflow

- Start Services: Launch the Service Admin to initialize AI rendering services

- Create Characters: Define AI actors with images and voice samples (voice print audio should be less than 10 seconds with 1 second of silence at the end)

- Write Scripts: Add dialogue entries (keep under 30 seconds when spoken for optimal quality)

- Configure Settings: Adjust exaggeration (0.0-1.0) and CFG weight (0.5-5.0) for emotional intensity and pacing

- Apply Effects: Use FFmpeg filters for video enhancement and post-processing

- Submit Jobs: Send scripts to the LipsyncFlow service for AI rendering

- Monitor Progress: Track job status in real-time through the job queue
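The limits quoted in the workflow above can be checked before a job is submitted. The following is a minimal sketch, assuming you keep the settings in your own script or spreadsheet; the function names are hypothetical and are not part of LipsyncFlow. The duration helper reads WAV files only, since MP3 would need a third-party decoder.

```python
import wave

# Ranges and limits quoted in the Quick Start Workflow.
EXAGGERATION_RANGE = (0.0, 1.0)
CFG_WEIGHT_RANGE = (0.5, 5.0)
MAX_VOICE_PRINT_SECONDS = 10.0


def wav_duration_seconds(path):
    """Return the length of a WAV file in seconds."""
    with wave.open(path, "rb") as wav:
        return wav.getnframes() / wav.getframerate()


def check_settings(exaggeration, cfg_weight, voice_print_seconds):
    """Return a list of problems; an empty list means everything is in range."""
    problems = []
    low, high = EXAGGERATION_RANGE
    if not low <= exaggeration <= high:
        problems.append(f"exaggeration {exaggeration} is outside {low}-{high}")
    low, high = CFG_WEIGHT_RANGE
    if not low <= cfg_weight <= high:
        problems.append(f"CFG weight {cfg_weight} is outside {low}-{high}")
    if voice_print_seconds >= MAX_VOICE_PRINT_SECONDS:
        problems.append(
            f"voice print is {voice_print_seconds:.1f} s; "
            f"keep it under {MAX_VOICE_PRINT_SECONDS:.0f} s"
        )
    return problems


print(check_settings(exaggeration=0.6, cfg_weight=0.5, voice_print_seconds=8.0))
print(check_settings(exaggeration=1.4, cfg_weight=0.2, voice_print_seconds=12.0))
```

In practice you would pass `wav_duration_seconds("my_voice.wav")` as the third argument, where `my_voice.wav` stands in for your own voice print file.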

💡 Pro Tips for Best Results

- Character Images: Use wide shots to avoid AI background generation inconsistencies

- Voice Quality: Use clean audio recordings without background noise for optimal voice cloning

- Video Length: Shorter videos (5-15 seconds) render faster with fewer artifacts

- Text Splitting: Use automatic text splitting for longer dialogue to create manageable segments

- AI-Assisted Editing: Generate comprehensive prompts for external AI chat interfaces to enhance scripts

- Frame Continuity: Use "Previous Last Frame" option for seamless transitions between clips
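To show the idea behind text splitting, here is a minimal sketch that groups whole sentences into segments short enough to speak within a time budget. It is not the splitter built into LipsyncFlow. The speaking rate of 2.5 words per second (about 150 words per minute) is an assumption, and the function names are hypothetical.

```python
import re

# Assumption: about 150 words per minute of speech; adjust for your voice.
WORDS_PER_SECOND = 2.5


def estimate_seconds(text):
    """Rough spoken duration of a piece of text, based on word count."""
    return len(text.split()) / WORDS_PER_SECOND


def split_dialogue(text, max_seconds=15.0):
    """Group whole sentences into segments of at most max_seconds each.

    A single sentence longer than max_seconds is kept whole, so it should
    be shortened by hand.
    """
    # Split after ., ! or ? when followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    segments = []
    current = ""
    for sentence in sentences:
        candidate = f"{current} {sentence}".strip()
        if current and estimate_seconds(candidate) > max_seconds:
            # Adding this sentence would exceed the budget: close the segment.
            segments.append(current)
            current = sentence
        else:
            current = candidate
    if current:
        segments.append(current)
    return segments


script = (
    "Welcome to the show. Tonight we have a very special guest with us. "
    "She has travelled a long way to be here! Are you ready to meet her?"
)
for number, segment in enumerate(split_dialogue(script, max_seconds=6.0), start=1):
    print(number, f"{estimate_seconds(segment):.1f}s", segment)
```

Splitting only at sentence boundaries keeps each clip ending on a natural pause, which tends to suit the "Previous Last Frame" transition between clips.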

See It In Action

Show others your dreams and tell a story

Be creative, be funny.

The future is what we make of it together. Come join us!

Working together as a team we can find the features hiding in our imaginations.

Adventures in your mind can be shown to others. Fight hard!

Teamwork is rational. Join us at StreamTeem as a Story Runner!

Professional character speaking with natural expressions

Fantasy creature with majestic voice and presence

Whimsical character bringing joy and wonder

Classic western character with authentic personality

Extended dialogue showcasing natural conversation flow

System Requirements & Performance

Hardware Requirements

- GPU: NVIDIA GPU with at least 24 GB of VRAM (recommended: RTX 4090 or similar)

- RAM: 96 GB system RAM recommended

- Storage: SSD with at least 200 GB free space

- CPU: Windows PC with a modern multi-core processor (Intel i7/AMD Ryzen 7 or better)
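Before downloading, you can compare your machine against the figures above. This is a minimal sketch, assuming you look up your VRAM and system RAM yourself (for example in Task Manager) and type them in; only the free disk space is read automatically. The function names are hypothetical.

```python
import shutil

# Figures taken from the hardware requirements above.
MIN_VRAM_GB = 24
RECOMMENDED_RAM_GB = 96
MIN_FREE_DISK_GB = 200


def free_disk_gb(path="."):
    """Free space on the drive holding `path`, in binary gigabytes."""
    return shutil.disk_usage(path).free / 1024**3


def check_hardware(vram_gb, ram_gb, free_disk):
    """Return which of the three requirements are met."""
    return {
        "vram": vram_gb >= MIN_VRAM_GB,
        "ram": ram_gb >= RECOMMENDED_RAM_GB,
        "disk": free_disk >= MIN_FREE_DISK_GB,
    }


# Example values for VRAM and RAM; replace them with your own.
report = check_hardware(vram_gb=24, ram_gb=64, free_disk=free_disk_gb())
for item, ok in report.items():
    print(f"{item}: {'OK' if ok else 'below the listed figure'}")
```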

Performance Expectations

- Rendering Speed: On a 4090 GPU, rendering takes approximately 1.5 minutes per second of video, so a 5-second video takes about 7.5 minutes

- Quality vs. Speed: Shorter videos render faster with better quality and fewer artifacts

- Maximum Length: Individual script entries should be less than 30 seconds when spoken

- Resolution: Default testing at 480×832 resolution; higher resolutions require significantly more VRAM

- Memory Scaling: Longer videos and higher resolutions require substantially more VRAM
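The rendering speed figure above lends itself to a quick planning estimate. The sketch below assumes the quoted rate of 1.5 minutes per second of video holds steadily across clip lengths on a 4090 at the default resolution; real times will vary with hardware and settings.

```python
# Rate quoted above for a 4090 GPU at the default test resolution.
MINUTES_PER_VIDEO_SECOND = 1.5


def estimate_render_minutes(clip_seconds):
    """Estimated total render time in minutes for a list of clip lengths."""
    return sum(clip_seconds) * MINUTES_PER_VIDEO_SECOND


print(estimate_render_minutes([5]))         # 7.5
print(estimate_render_minutes([5, 12, 8]))  # 37.5
```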

AI Model Details

- Video Generation: Powered by MultiTalk for realistic lip movements and facial expressions

- Voice Cloning: Uses Chatterbox for high-quality text-to-speech and voice replication

- Frame Continuity: Advanced features maintain visual consistency between video clips

- Customizable Prompts: Fine-tune emotional expressions and character behavior

- FFmpeg Integration: Comprehensive post-processing capabilities for professional results

Getting Started

LipsyncFlow is available as a portable application for easy setup. Simply download and install LipsyncFlow to get started.

Story Runner members receive updates and support through the StreamTeem Discord server, while purchasers get the complete portable application with all necessary AI models and services.

Frequently Asked Questions (FAQ)

What is LipsyncFlow?

LipsyncFlow is an AI-powered application that generates realistic lipsync videos where characters speak dialogue with natural lip movements and facial expressions. It uses state-of-the-art AI models including MultiTalk for video generation and Chatterbox for voice cloning.

How do I get LipsyncFlow?

You can purchase LipsyncFlow, which includes the portable application (approximately 150 GB of AI models and code). Story Runner members get updates and support through Discord, while purchasers get the complete standalone application.

What are the system requirements?

You need a GPU with at least 24 GB of VRAM (like an RTX 4090) and a fast SSD with at least 200 GB of free space. 96 GB of system RAM is recommended for optimal performance.

Can I customize the characters and voices?

Yes! You can create custom characters with your own images and voice samples. The AI will clone voices and generate lipsync based on your provided audio. Voice print audio should be less than 10 seconds with 1 second of silence at the end for best results.

How long does video generation take?

On a 4090 GPU, rendering takes approximately 1.5 minutes per second of video, so a 5-second video takes about 7.5 minutes. Shorter videos render faster with better quality. Max length per script entry is 30 seconds.

What file formats are supported?

LipsyncFlow supports various image formats (JPG, PNG) for characters and audio formats (WAV, MP3) for voice samples. Output videos are in standard MP4 format.

How do I manage multiple video clips?

Use sequences to group related script entries together. You can apply FFmpeg filters to individual clips or the entire sequence. The system supports frame continuity between clips for seamless transitions.

Can I use AI to help edit my scripts?

Yes! LipsyncFlow includes AI-assisted editing features. You can generate comprehensive prompts for external AI chat interfaces, then import the results back into your script for enhanced dialogue and character development.

What advanced settings can I adjust?

You can fine-tune exaggeration (0.0-1.0) for emotional intensity, CFG weight (0.5-5.0) for speech pacing, and various AI model parameters including sample steps, audio guide scale, and color correction strength.

How do I troubleshoot rendering issues?

Check the job queue for real-time status updates, validate your script before submission, ensure all file paths are correct, and monitor GPU memory usage. The system provides detailed logs and error reporting for troubleshooting.
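For the GPU memory part of that advice, one option outside LipsyncFlow is NVIDIA's `nvidia-smi` tool, which ships with the GPU driver. The sketch below is an assumption about how you might read its output yourself, not a description of LipsyncFlow's own logging; the function names are hypothetical.

```python
import subprocess

# Ask nvidia-smi for used and total memory per GPU, in MiB, as bare CSV.
QUERY = [
    "nvidia-smi",
    "--query-gpu=memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_memory_line(line):
    """Turn one CSV line such as '21350, 24564' into (used_mib, total_mib)."""
    used, total = (int(value.strip()) for value in line.split(","))
    return used, total


def gpu_memory_mib():
    """Run nvidia-smi and return one (used, total) pair per GPU."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return [parse_memory_line(line) for line in result.stdout.splitlines() if line.strip()]


# Parsing a sample line, so the example runs even without an NVIDIA GPU.
used, total = parse_memory_line("21350, 24564")
print(f"{used} of {total} MiB in use ({used / total:.0%})")
```

Calling `gpu_memory_mib()` every few seconds while a job renders shows whether a failed clip coincided with the card running out of memory.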